The Ultimate Software

Machine Learning and Intelligence

Pedro Domingos

Professor

Paul G. Allen School of Computer Science & Engineering

University of Washington

About the Lecture

Machine learning is the automation of discovery. With it, computers can program themselves instead of having to be programmed by us. Learning systems are widely used in science, business, and government, but are still shrouded in mystery. This lecture will explain the five major paradigms in machine learning: symbolic learning, deep learning, genetic algorithms, Bayesian learning, and reasoning by analogy. It will also provide samples of some of the major applications they enable, from automated biology to personalized recommendations. The lecture will conclude with a look at what machine learning will bring in the future, and at the roadblocks, dangers, and opportunities that will come with that future.

About the Speaker

Pedro Domingos is a professor of computer science and engineering at the University of Washington, interested in data science, machine learning and artificial intelligence. He helped start the fields of statistical relational AI, data stream mining, adversarial learning, machine learning for information integration, and influence maximization in social networks.

Pedro is on the editorial board of Machine Learning, is a past associate editor of the Journal of Artificial Intelligence Research, and is a co-founder of the International Machine Learning Society. He is the author or co-author of over 200 technical publications, and the author of “The Master Algorithm,” a book about machine learning and AI for lay readers. He writes widely on AI and related subjects for the media, including the Wall Street Journal, the Spectator, Scientific American, and Wired.

Among other honors, Pedro has received the SIGKDD Innovation Award and the IJCAI McCarthy Award, two of the highest honors in data science and AI. He is a Fellow of the AAAS and AAAI.

Pedro earned his undergraduate degree from IST of the Technical University of Lisbon in Portugal, and an MS and a PhD in Information and Computer Science at UC Irvine.

Minutes

On September 10, 2021, by Zoom videoconference broadcast on the PSW Science YouTube channel, President Larry Millstein called the 2,444th meeting of the Society to order at 8:02 p.m. EDT. He announced the order of business, summarized the annual report of the Society, and welcomed new members. The Recording Secretary then read the minutes of the previous meeting.

President Millstein then introduced the speaker for the evening, Pedro Domingos, Professor at the University of Washington, Paul G. Allen School of Computer Science & Engineering. His lecture was titled, “The Ultimate Software: Machine Learning and Intelligence.”

Domingos began by asking, “Where does knowledge come from?” Until recently, the answer was evolution, experience, and culture. Now, Domingos said, computers are poised to discover more knowledge than has ever existed before.

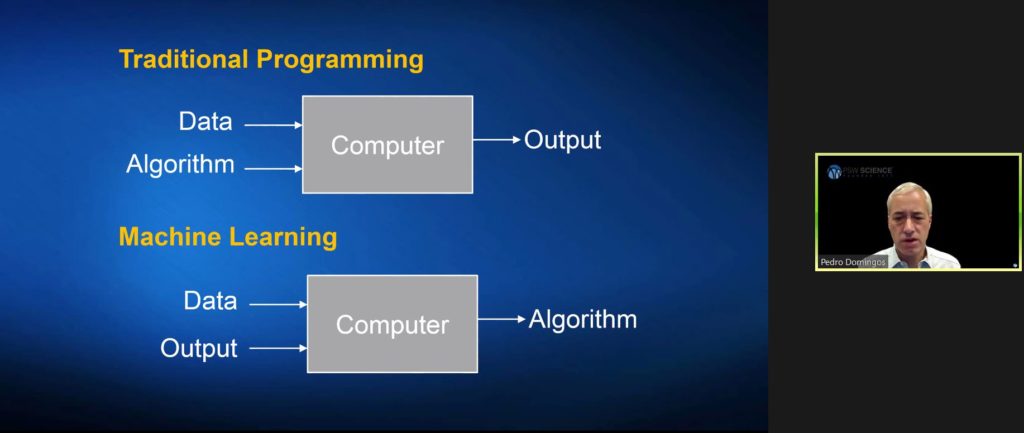

He explained that in traditional programming, data and an algorithm are input into a computer to produce an output, whereas in machine learning, data and the desired output are input to produce an algorithm. Learning algorithms can therefore be termed “master algorithms,” because they are algorithms that create other algorithms.
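To make the contrast concrete, here is a minimal Python sketch that is our own illustration, not something shown in the lecture. `celsius_to_fahrenheit` is a hand-written program in the traditional sense, while `learn_linear` is a toy learner that receives only inputs and desired outputs and returns a new function. Both names, and the choice of a least-squares line fit as the "learner," are hypothetical; real learners search far richer spaces of programs.

```python
from typing import Callable, List


def celsius_to_fahrenheit(c: float) -> float:
    """Traditional programming: a person writes the algorithm by hand."""
    return c * 9 / 5 + 32


def learn_linear(xs: List[float], ys: List[float]) -> Callable[[float], float]:
    """Machine learning (toy version): infer an algorithm from inputs and desired outputs.

    Fits y = slope * x + intercept by ordinary least squares and returns the
    fitted function, i.e. an algorithm whose output is another algorithm.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


# Give the learner only examples of inputs and the desired outputs.
inputs = [0.0, 10.0, 20.0, 30.0, 100.0]
outputs = [celsius_to_fahrenheit(c) for c in inputs]
learned = learn_linear(inputs, outputs)
print(round(learned(37.0), 6))  # prints 98.6, for an input the learner never saw
```

The learned function was never written by hand; it was produced by `learn_linear` from examples alone.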

Domingos then explained how machine learning works in practice. He said there are “five tribes” of machine learning, each with its own master algorithm, and he described each in turn.

Symbolists use inverse deduction, which Domingos described as the “most computer science-y” of the approaches to machine learning. This method fills in gaps in existing knowledge to induce new general rules, and was recently used to discover a new malaria drug. Historically, the critics of this approach have been connectionists, who argue that inverse deduction is too artificial and that real discoveries are made in life, not on paper.

Connectionists use backpropagation, short for “backward propagation of errors,” an approach aimed at reverse-engineering the algorithm by which the human brain learns. Three connectionists, Geoff Hinton, Yann LeCun, and Yoshua Bengio, recently received the Turing Award for their work on “deep learning,” which the speaker called the “modern re-branding” of connectionism. He then described how connectionists build artificial neural networks and use backpropagation to calculate the gradient of an error function with respect to the network’s weights. This approach is used in search engines and in other applications that recommend results to users.
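The following minimal Python sketch, our own illustration rather than anything presented in the lecture, shows backpropagation computing the gradient of a squared-error function with respect to every weight of a network with one hidden layer of sigmoid units, and then using those gradients for gradient descent. The network size, learning rate, toy OR dataset, and all function names are hypothetical choices; a bias is handled by appending a constant input of 1, and practical systems use libraries that compute such gradients automatically.

```python
import math
import random
from typing import List, Tuple

Example = Tuple[List[float], float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(W1, w2, x):
    """Return the hidden activations and the output of a one-hidden-layer sigmoid network."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y = sigmoid(sum(wj * hj for wj, hj in zip(w2, h)))
    return h, y


def backprop(W1, w2, x, target):
    """Gradient of the error E = 0.5 * (y - target)**2 with respect to every weight."""
    h, y = forward(W1, w2, x)
    # Error signal at the output unit: dE/dz, where z is the output's weighted input.
    delta_out = (y - target) * y * (1 - y)
    grad_w2 = [delta_out * hj for hj in h]
    # Propagate the error signal backward through each hidden unit.
    delta_hidden = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    grad_W1 = [[dj * xi for xi in x] for dj in delta_hidden]
    return grad_W1, grad_w2


def total_error(W1, w2, data: List[Example]) -> float:
    return sum(0.5 * (forward(W1, w2, x)[1] - t) ** 2 for x, t in data)


def train(data: List[Example], n_hidden=3, lr=0.5, epochs=2000, seed=0):
    """Gradient descent with backpropagated gradients; epochs=0 returns the initial weights."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    for _ in range(epochs):
        for x, t in data:
            gW1, gw2 = backprop(W1, w2, x, t)
            for j in range(n_hidden):
                w2[j] -= lr * gw2[j]
                for i in range(n_in):
                    W1[j][i] -= lr * gW1[j][i]
    return W1, w2


# Toy task: logical OR; the last input is a constant 1 acting as a bias.
OR_DATA = [([0, 0, 1], 0.0), ([0, 1, 1], 1.0), ([1, 0, 1], 1.0), ([1, 1, 1], 1.0)]
before = total_error(*train(OR_DATA, epochs=0), OR_DATA)
after = total_error(*train(OR_DATA), OR_DATA)
print(f"error before: {before:.3f}, after: {after:.3f}")
```

Because both calls to `train` use the same seed, they start from the same weights, so the two printed errors show the effect of training alone.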

Evolutionaries criticize connectionism for learning only the weights of a fixed architecture, and ask instead where that architecture came from. To simulate evolution, evolutionaries use genetic programming, an advanced form of genetic algorithm. Genetic programming evolves programs: starting from a population of “unfit” programs, it produces programs fit for a particular task by repeatedly applying operations analogous to natural genetic processes, such as crossover and mutation, to the population.
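As a rough illustration, the Python sketch below evolves small arithmetic expression trees toward a target function. It is our own toy example, not the speaker's: the function set, the target x² + x + 1, truncation selection, elitism, the depth cap, and every name are hypothetical choices. It shows the loop the paragraph describes: a random initial population, selection of the fitter programs, and crossover and mutation to produce the next generation.

```python
import random
from typing import Callable, Iterator, List, Tuple, Union

# A program is a terminal ("x" or a small integer) or a tuple (op, left, right).
Tree = Union[str, int, tuple]
FUNCS = {"add": lambda a, b: a + b, "sub": lambda a, b: a - b, "mul": lambda a, b: a * b}
TERMINALS = ["x", 1, 2]


def random_tree(rng: random.Random, depth: int) -> Tree:
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    op = rng.choice(list(FUNCS))
    return (op, random_tree(rng, depth - 1), random_tree(rng, depth - 1))


def evaluate(tree: Tree, x: int) -> int:
    if isinstance(tree, tuple):
        op, left, right = tree
        return FUNCS[op](evaluate(left, x), evaluate(right, x))
    return x if tree == "x" else tree


def error(tree: Tree, target: Callable[[int], int], xs: List[int]) -> int:
    """Lower is fitter: total absolute error over the sample points."""
    return sum(abs(evaluate(tree, x) - target(x)) for x in xs)


def depth(tree: Tree) -> int:
    return 1 + max(depth(tree[1]), depth(tree[2])) if isinstance(tree, tuple) else 0


def subtrees(tree: Tree, path: Tuple[int, ...] = ()) -> Iterator[Tuple[Tuple[int, ...], Tree]]:
    """Yield (path, subtree) for every node; a path lists child indices from the root."""
    yield path, tree
    if isinstance(tree, tuple):
        for i in (1, 2):
            yield from subtrees(tree[i], path + (i,))


def replace(tree: Tree, path: Tuple[int, ...], new: Tree) -> Tree:
    if not path:
        return new
    parts = list(tree)
    parts[path[0]] = replace(tree[path[0]], path[1:], new)
    return tuple(parts)


def crossover(rng: random.Random, a: Tree, b: Tree) -> Tree:
    """Graft a random subtree of b onto a random point in a (analogous to recombination)."""
    path, _ = rng.choice(list(subtrees(a)))
    _, donor = rng.choice(list(subtrees(b)))
    return replace(a, path, donor)


def mutate(rng: random.Random, tree: Tree) -> Tree:
    """Replace a random subtree with a fresh random one (analogous to mutation)."""
    path, _ = rng.choice(list(subtrees(tree)))
    return replace(tree, path, random_tree(rng, 2))


def evolve(target, xs, pop_size=60, generations=40, seed=0):
    rng = random.Random(seed)
    pop = [random_tree(rng, 3) for _ in range(pop_size)]  # initial, mostly "unfit" programs
    best_errors = []
    for _ in range(generations):
        pop.sort(key=lambda t: error(t, target, xs))
        best_errors.append(error(pop[0], target, xs))
        parents = pop[: pop_size // 4]   # truncation selection: keep the fittest quarter
        next_pop = [pop[0]]              # elitism: the best program survives unchanged
        while len(next_pop) < pop_size:
            child = crossover(rng, rng.choice(parents), rng.choice(parents))
            if rng.random() < 0.3:
                child = mutate(rng, child)
            if depth(child) > 6:         # cap program size to limit bloat
                child = rng.choice(parents)
            next_pop.append(child)
        pop = next_pop
    pop.sort(key=lambda t: error(t, target, xs))
    return pop[0], best_errors


best, history = evolve(lambda x: x * x + x + 1, list(range(-5, 6)))
print(history[0], history[-1], best)
```

Elitism guarantees that the best error per generation, recorded in `history`, never gets worse; whether the search finds an exact program depends on the random seed and settings.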

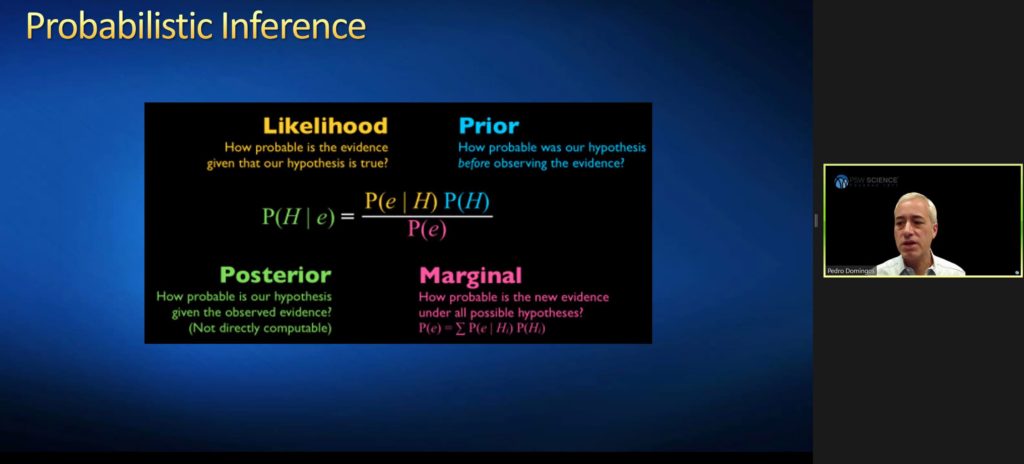

Bayesians criticize these biologically inspired approaches as potentially too random, and instead advocate Bayes’ theorem, which uses probabilistic inference to systematically reduce uncertainty. Probabilistic inference takes evidence as input and produces conclusions that hold with some probability, updating those probabilities as new evidence arrives. This approach is used in self-driving cars and spam filters, and is growing in popularity.
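Since the paragraph mentions spam filters, here is a minimal naive Bayes spam classifier in Python as one concrete use of Bayes’ theorem, P(H | E) = P(E | H) P(H) / P(E). It is our own sketch, not from the lecture; the assumption that words are independent given the label (the “naive” part), the add-one smoothing, the toy messages, and the function names are all illustrative choices.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

LABELS = ("spam", "ham")


def train_spam_filter(messages: List[Tuple[List[str], str]]):
    """Count how often each word appears in spam and in legitimate ("ham") messages."""
    word_counts: Dict[str, Counter] = {label: Counter() for label in LABELS}
    message_counts: Counter = Counter()
    for words, label in messages:
        message_counts[label] += 1
        word_counts[label].update(words)
    vocab = set().union(*word_counts.values())
    return word_counts, message_counts, vocab


def p_spam(model, words: List[str]) -> float:
    """Posterior P(spam | words) via Bayes' theorem, assuming words are independent given the label."""
    word_counts, message_counts, vocab = model
    n_messages = sum(message_counts.values())
    log_joint = {}
    for label in LABELS:
        # log P(label) + sum of log P(word | label), with add-one (Laplace) smoothing
        score = math.log(message_counts[label] / n_messages)
        n_words = sum(word_counts[label].values())
        for w in words:
            score += math.log((word_counts[label][w] + 1) / (n_words + len(vocab)))
        log_joint[label] = score
    # Dividing by P(words), the sum of the joint probabilities, normalizes the posterior.
    top = max(log_joint.values())
    joint = {label: math.exp(s - top) for label, s in log_joint.items()}
    return joint["spam"] / sum(joint.values())


model = train_spam_filter([
    (["win", "free", "money"], "spam"),
    (["free", "offer"], "spam"),
    (["meeting", "tomorrow"], "ham"),
    (["lunch", "tomorrow", "money"], "ham"),
])
print(round(p_spam(model, ["free", "money"]), 3))        # prints 0.75
print(round(p_spam(model, ["meeting", "tomorrow"]), 3))  # prints 0.143
```

Working in log probabilities avoids numerical underflow when a message contains many words.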

Analogizers use kernel machines to recognize similarities between new cases and old ones. Domingos said this approach is often the easiest for a lay audience to understand. He explained the approach’s “nearest neighbor” algorithm, which, given enough data, can in principle learn any function. However, the speaker said the approach is limited in its ability to produce high-resolution outputs. This limitation makes the approach well suited to recommending products to consumers, but less so to mapping minefields.
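The nearest-neighbor idea fits in a few lines. The Python sketch below, our own illustration rather than the speaker’s code, classifies a new case by a majority vote among the k most similar stored cases under Euclidean distance. The value k = 3, the toy data, and the function name are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter
from typing import Hashable, List, Sequence, Tuple


def knn_predict(examples: List[Tuple[Sequence[float], Hashable]],
                query: Sequence[float], k: int = 3) -> Hashable:
    """Predict the label of `query` by majority vote among its k nearest stored examples."""
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


examples = [((0, 0), "A"), ((0, 1), "A"), ((1, 0), "A"),
            ((5, 5), "B"), ((5, 6), "B"), ((6, 5), "B")]
print(knn_predict(examples, (0.5, 0.5)))  # prints A
print(knn_predict(examples, (5.5, 5.2)))  # prints B
```

There is no training step: the stored examples are the model, and all the work happens when a new case is compared with them.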

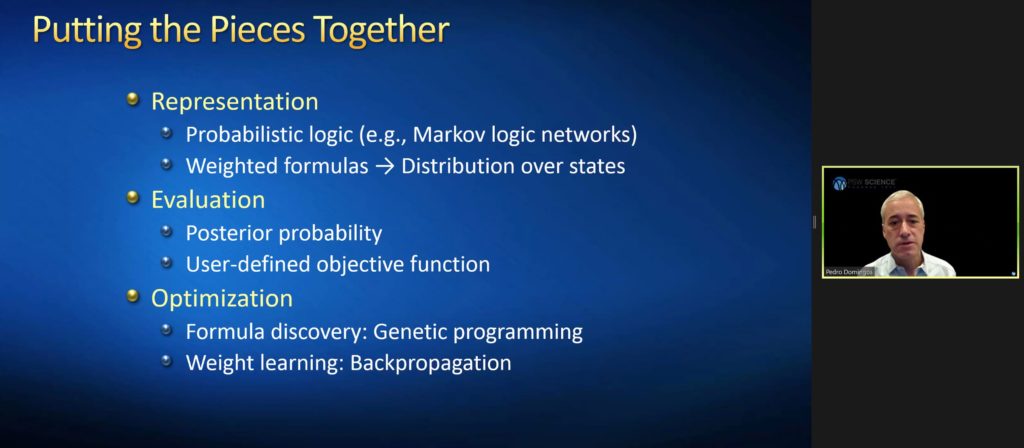

Domingos said that while each tribe thinks its own approach is best, he sees problems and limitations in each. What we need, he said, is a single algorithm that overcomes the limitations of all five: a true “master algorithm.” To that end, he said that all five existing approaches can be reduced to three components: representation, evaluation, and optimization. He then explained why, once reduced in this way, the approaches can be synthesized.
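One way to picture this decomposition is as three interchangeable parts of a learner. In the hypothetical Python sketch below (our own, not from the lecture), the representation is a family of one-feature threshold rules, the evaluation is accuracy on the training data, and the optimization is an exhaustive search over candidate thresholds. Swapping any one part, for example neural networks scored by squared error and optimized by gradient descent, would yield a different kind of learner.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

H = TypeVar("H")
Data = List[Tuple[float, int]]


# Representation: a hypothesis is a threshold t meaning "predict 1 if x >= t, else 0".
def predict(t: float, x: float) -> int:
    return 1 if x >= t else 0


# Evaluation: score a hypothesis by its accuracy on the data.
def accuracy(t: float, data: Data) -> float:
    return sum(predict(t, x) == y for x, y in data) / len(data)


# Optimization: search the candidate hypotheses for the highest-scoring one.
def learn(candidates: Iterable[H], evaluate: Callable[[H, Data], float], data: Data) -> H:
    return max(candidates, key=lambda h: evaluate(h, data))


data = [(1.0, 0), (2.0, 0), (3.0, 1), (4.0, 1)]
best = learn([0.5, 1.5, 2.5, 3.5, 4.5], accuracy, data)
print(best, accuracy(best, data))  # prints 2.5 1.0
```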

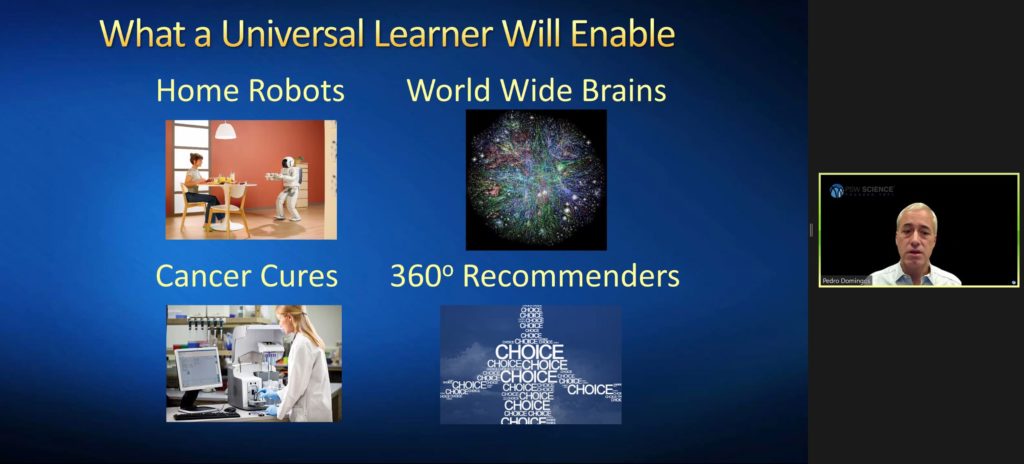

If a universal learner is achieved, Domingos believes machine learning will make possible home robots, a worldwide brain, cures for cancer, and 360° recommenders. But even then, he believes there will be more work to do, and that this work will likely come from people outside the machine learning field.

The speaker then answered questions from the online viewing audience. After the question and answer period, President Millstein thanked the speaker, made the usual housekeeping announcements, and invited guests to join the Society. President Millstein adjourned the meeting at 9:54 p.m.

Temperature in Washington, D.C.: 22° C

Weather: Clear

Concurrent viewers of the Zoom and YouTube live stream: 81

Views on the PSW Science YouTube and Vimeo channels: 199

Respectfully submitted,

James Heelan, Recording Secretary